Best Practices in Prompt Strategy & Prompt Engineering: A Real-World AI Storyboarding Case Study

This in-depth exploration of prompt engineering best practices showcases a real-world AI storyboarding project. We'll demonstrate how applying clear strategies and iterative refinement techniques significantly enhances the quality and coherence of AI-generated narratives.

In 2023, as demand for AI surged, we transitioned from R&D to delivering client-focused AI solutions. This journey presented unique challenges, allowing us to develop best practices in prompt strategy and prompt engineering.

In this article, we share these best practices that enhanced the coherence and quality of AI-generated outputs, illustrated through a real-world case study of an AI tool automating storyboard creation in video production. By interviewing users, generating scripts, creating storyboards, and compiling them into a demo, this tool streamlined workflows, bridged communication gaps, and became a fully automated, impactful solution for our client.

Lesson 1: Defining a Prompt Strategy Early and Refining It Through Iteration

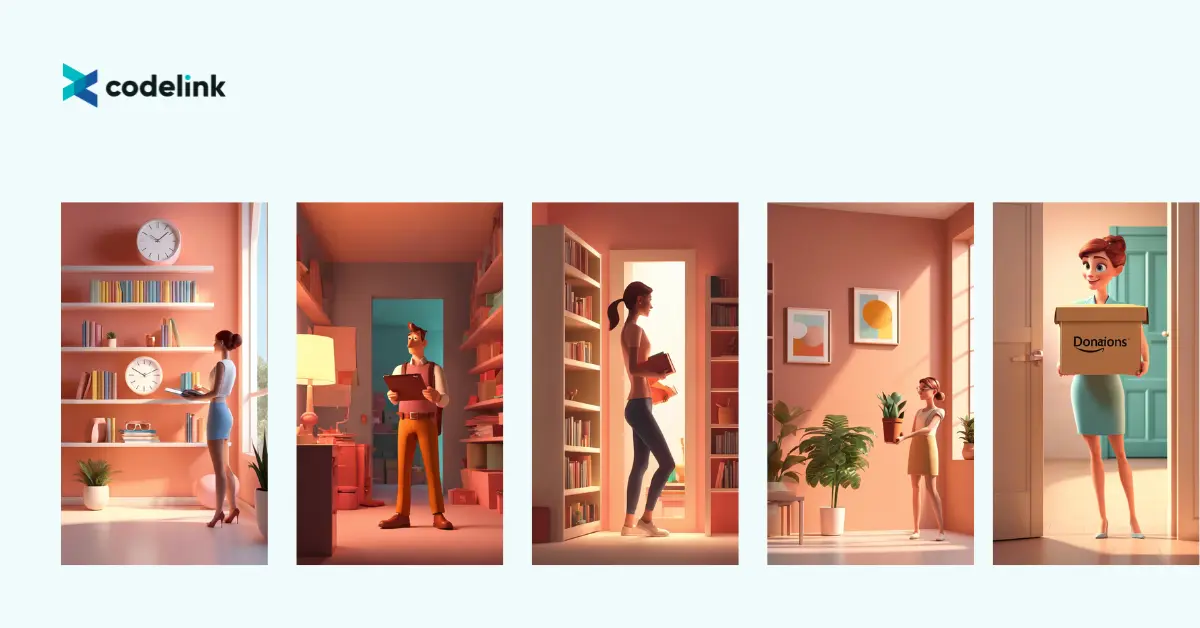

A successful project always begins with a well-defined strategy. In this project, ensuring coherence between the script and the visuals was critical, because the expected output was a storyboard. Storyboards play a central role in video production, serving as a blueprint that aligns visuals with the narrative.

The challenge is that image models cannot reference previously generated images. A language model can draw on earlier prompts and outputs as long as they are included in the conversation history (context), but for an image model each description is a standalone prompt. This makes it hard to produce a sequence of visuals that keeps characters, objects, and settings consistent while still showing the small changes between scenes needed to tell a cohesive story.

Our team addressed these challenges by defining strategies at the outset and refining them iteratively, which let us test and develop a complex, multi-stage AI pipeline that produces cohesive, high-quality outputs.

Early-Stage Prompt Strategy Development

At the outset, we worked closely with the client to understand their requirements and define clear project goals. This understanding allowed us to structure the workflow step by step, mirroring how a human would approach the work. We began by identifying which parts could be handled by deterministic code, which should be handled by AI, and how to integrate them seamlessly.

We developed an actionable plan for each strategy, starting with high-level tests to gauge overall outcomes. For every candidate approach, we evaluated several models with straightforward prompts to assess how well each fit the project.

For this project, we tackled the challenge of maintaining visual consistency by defining and testing two distinct approaches:

1. Approach 1: Context-Independent Image Descriptions

- Images were generated for each scene using standalone prompts based on each sentence of the voiceover script.

- This approach offered faster generation times, and each image was expected to be relevant to its own sentence of the script. In practice, however, it produced repetitive visuals with no contextual continuity and little narrative progression.

2. Approach 2: Storyline-Driven Image Descriptions

- A cohesive storyline was developed, with detailed image descriptions guiding the model for each scene.

- This approach ensured visual consistency and narrative alignment, but generation was slower because each step depended on the previous one and had to run sequentially rather than in parallel.
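The trade-off between the two approaches can be sketched as follows. This is a minimal illustration, not the pipeline we shipped: `describe_image` is a hypothetical stand-in for a language-model call, and the parallel executor is our assumption about how independent prompts would be dispatched.

```python
from concurrent.futures import ThreadPoolExecutor


def describe_image(sentence: str, context: str = "") -> str:
    """Hypothetical stand-in for a language-model call that turns one
    script sentence (plus optional story context) into an image description."""
    if context:
        return f"{sentence} (continuing from: {context})"
    return sentence


def approach_1(script: list[str]) -> list[str]:
    # Context-independent: every sentence becomes a standalone prompt,
    # so all calls can run in parallel (pool.map keeps the input order).
    with ThreadPoolExecutor() as pool:
        return list(pool.map(describe_image, script))


def approach_2(script: list[str]) -> list[str]:
    # Storyline-driven: each description is built on the previous one,
    # so the calls must run one after another.
    descriptions: list[str] = []
    previous = ""
    for sentence in script:
        previous = describe_image(sentence, context=previous)
        descriptions.append(previous)
    return descriptions
```

The loop in `approach_2` is what makes it slower: no call can start until the previous description exists.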

Testing these approaches and analyzing the results gave us a rough idea of the likely outcomes of each. This foresight helped us anticipate potential challenges and set clear expectations. We then presented the client with an overview of the strengths and limitations of each approach so they could make an informed decision.

Together, the client and our team aligned on the strategy best suited to the project's goals. Before moving into detailed execution, we collaborated with the client to define crucial elements, including:

- Models to use.

- Workflow order.

- Parameters to test.

- Levels of prompt detail.

- Testing methods, either isolated or integrated into the entire project flow.
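One way to keep these agreed decisions explicit is to record them in a single configuration object. The sketch below is hypothetical: the field names, model names, and stage names are illustrative placeholders, not the values used in the project.

```python
from dataclasses import dataclass


@dataclass
class PromptStrategyConfig:
    """Hypothetical record of the decisions agreed with the client
    before execution; all names and values are illustrative."""
    models: dict[str, str]                # role -> model name
    workflow_order: list[str]             # pipeline stages, in order
    parameters_to_test: dict[str, list]   # parameter -> candidate values
    prompt_detail: str = "detailed"       # e.g. "minimal" or "detailed"
    testing_mode: str = "integrated"      # "isolated" or "integrated"

    def __post_init__(self) -> None:
        # Only the two testing methods listed above are allowed.
        if self.testing_mode not in ("isolated", "integrated"):
            raise ValueError(f"unknown testing mode: {self.testing_mode}")


config = PromptStrategyConfig(
    models={"text": "gpt-model-placeholder", "image": "stable-diffusion-placeholder"},
    workflow_order=["interview", "script", "storyline", "scenes", "images", "demo"],
    parameters_to_test={"temperature": [0.3, 0.7]},
    testing_mode="isolated",
)
```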

By defining prompt strategies early on, we built a strong foundation that set clear expectations, minimized deviations during implementation, and ensured the project stayed on track to meet its goals.

Iterative Refinement with Alignment to Prompt Strategy

After finalizing the prompt strategy, we prioritized iteratively refining each step to enhance the generated results while staying aligned with the agreed-upon strategy. We also maintained a collaborative workflow with the client, regularly sharing updates, insights, and adjustments to keep everything on track and everyone on the same page.

To improve the results at each stage of the pipeline, our first, intuitive approach was to refine and test prompts iteratively. By adding details and requirements, emphasizing both what we wanted to achieve and what to avoid, we could guide the AI toward more accurate and consistent outputs.

As we progressed through each stage, certain tasks proved too complex for the AI to handle in a single prompt. Our solution was to divide each complex task into smaller steps. For example, generating the visuals for an entire scene in one step often led to inconsistencies, so we divided the narrative generation step into smaller, manageable segments:

- Generating a cohesive story.

- Splitting the story into illustratable scenes.

- Defining key visual elements for each scene.
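The three segments can be chained as separate, focused prompts. This is a minimal sketch under stated assumptions: `LLM` stands for any function that takes a prompt and returns text, and the prompt wording is illustrative rather than the prompts we actually used.

```python
from typing import Callable

# Hypothetical signature for any language-model call: prompt in, text out.
LLM = Callable[[str], str]


def generate_story(llm: LLM, brief: str) -> str:
    # Step 1: one focused prompt that only produces the story.
    return llm(f"Write a short, cohesive story for this brief: {brief}")


def split_into_scenes(llm: LLM, story: str) -> list[str]:
    # Step 2: ask only for scene boundaries, one scene per line.
    reply = llm(f"Split this story into illustratable scenes, one per line:\n{story}")
    return [line.strip() for line in reply.splitlines() if line.strip()]


def define_visual_elements(llm: LLM, scene: str) -> str:
    # Step 3: a separate prompt per scene for its key visual elements.
    return llm(f"List the key visual elements (characters, setting, objects) for: {scene}")


def build_storyboard(llm: LLM, brief: str) -> list[str]:
    # Each step's output feeds the next, so every prompt stays small.
    story = generate_story(llm, brief)
    scenes = split_into_scenes(llm, story)
    return [define_visual_elements(llm, scene) for scene in scenes]
```

Because each step is its own function, a weak result can be traced to one prompt and refined without touching the others.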

Additionally, throughout implementation, we refined model configurations iteratively to improve output quality at every stage:

- Language Model (GPT): Adjusted parameters such as temperature to improve script coherence and contextual accuracy.

- Image Generation Model (Stable Diffusion): Tuned style settings and prompts to better represent evolving settings and character details, enabling seamless transitions between narrative elements.
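Iterative parameter tuning is easier to track when every candidate combination is enumerated up front. The sketch below assumes specific candidate values that the article does not state; `guidance_scale` and `num_inference_steps` are keyword arguments accepted by Hugging Face diffusers' `StableDiffusionPipeline`, and the request body follows the shape of OpenAI's Chat Completions API with a placeholder model name. No model is actually called.

```python
from itertools import product

# Hypothetical candidate values; the article names the parameters, not the values.
TEXT_GRID = {"temperature": [0.2, 0.5, 0.8]}
IMAGE_GRID = {"guidance_scale": [5.0, 7.5], "num_inference_steps": [30, 50]}


def expand_grid(grid: dict[str, list]) -> list[dict]:
    """Turn {param: [values]} into one settings dict per combination."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in product(*grid.values())]


def chat_request(prompt: str, settings: dict) -> dict:
    # Request body in the shape of a Chat Completions call; the model
    # name is a placeholder and the settings are merged in unchanged.
    return {
        "model": "gpt-model-placeholder",
        "messages": [{"role": "user", "content": prompt}],
        **settings,
    }
```

For example, `expand_grid(IMAGE_GRID)` yields four settings dicts, each of which could be passed as keyword arguments to a pipeline call and compared side by side.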

Each refinement was guided by observed results and client feedback, ensuring steady progress and better alignment with the project goals. This iterative approach not only enhanced the quality of our outputs but also contributed to more effective AI-driven workflows.

Key Takeaways

1. Defining clear prompt strategies at the beginning of the project ensures alignment with overarching objectives and minimizes deviations during implementation. This proactive approach streamlines workflows and produces actionable plans, saving time and effort in later stages.

For example, early testing with straightforward prompts allowed the team to evaluate various models and refine the strategy based on tangible outcomes. This foundational clarity not only reduced potential missteps but also created a shared understanding of the project’s direction, fostering efficiency and precision throughout execution.

2. Don't expect the AI model to handle complex tasks all at once. During iterative refinement, we found that for tasks requiring multiple instructions or steps, it's beneficial to break them into smaller subtasks. Smaller prompts not only enhance the model's response quality but also increase controllability, simplify debugging, and boost accuracy.

For instance, by first generating a simple script and then layering in visual details, we enhanced both clarity and coherence in the final output. This approach also allowed us to refine each subtask independently, ensuring high-quality results.

Learn more about these key insights and best practices from the following references: Google Vertex AI - Breaking Down Prompts and OpenAI - Guide to Prompt Engineering.

Lesson 2: Overcoming Inconsistencies in AI Outputs

Inconsistencies initially hindered the visual and narrative flow of AI-generated images. Through iterative refinement, we identified and applied best practices in prompt engineering to overcome inconsistencies, ensuring that images aligned with the storyline while resonating as a unified, engaging experience.

Achieving Image Style Harmony

Generating image prompts for each scene individually initially led to noticeable style discrepancies. To address this, we utilized a detailed and standardized style template (e.g., line drawing style, vector style, photorealistic style) for the image generation model. This approach ensured that all images adhered to a cohesive style, significantly improving narrative engagement.

Overcoming Randomness of Characters

Inconsistent character appearances disrupted the narrative flow. During the early image-generation preparation phase, when the image descriptions were generated, we incorporated unique identifiers into the image generation prompts: a name plus descriptive attributes such as age, ethnicity, and clothing. These identifiers were applied consistently across scenes.
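A simple way to guarantee that the identifier never drifts is to build it once from a fixed character sheet. This is a hypothetical sketch: the `role` field and the example character are our own illustrations, added alongside the attributes named above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Character:
    # Attributes named above, plus a hypothetical "role" field.
    name: str
    age: int
    ethnicity: str
    role: str
    clothing: str

    def identifier(self) -> str:
        """Fixed descriptor reused verbatim in every scene prompt."""
        return (f"{self.name}, {self.age}-year-old {self.ethnicity} "
                f"{self.role} wearing {self.clothing}")


# Hypothetical example character.
mai = Character("Mai", 16, "Vietnamese", "high school student", "a red sweater")
```

Because the dataclass is frozen, the attributes cannot be changed between scenes by accident.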

Additionally, prompt weights helped emphasize important elements. High prompt weights were assigned to key identifiers (e.g., “teenage high school student in red sweater”), while smaller weights were assigned to style and color keywords to allow the model to focus on generating the scene.
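Prompt-weight syntax depends on the front end. The sketch below assumes the "(term:weight)" emphasis convention of the AUTOMATIC1111 Stable Diffusion web UI; plain diffusers does not parse this syntax, and other tools use different notation. The weight values are illustrative.

```python
def weighted(term: str, weight: float) -> str:
    """Format one term in the "(term:weight)" emphasis syntax
    (assumed AUTOMATIC1111 convention); 1.0 means no change."""
    if weight == 1.0:
        return term
    return f"({term}:{weight:g})"


def build_weighted_prompt(terms: list[tuple[str, float]]) -> str:
    # Key identifiers get weights above 1.0; style keywords below 1.0.
    return ", ".join(weighted(term, w) for term, w in terms)


prompt = build_weighted_prompt([
    ("teenage high school student in red sweater", 1.4),
    ("student walking to university", 1.1),
    ("line art", 0.8),
    ("minimalistic", 0.8),
])
```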

Prompt examples:

- Scene 1: Line art, minimalistic, teenage high school student in a red sweater, student walking to university.

- Scene 2: Line art, minimalistic, teenage high school student in a red sweater, student eating at cafeteria with friends.
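The two prompts above share a fixed style and character and differ only in the scene. A minimal template that reproduces them exactly might look like this; the constant and function names are hypothetical.

```python
# Fixed parts of the template: identical in every scene prompt.
STYLE = "Line art, minimalistic"
CHARACTER = "teenage high school student in a red sweater"

TEMPLATE = "{style}, {character}, {scene}."


def scene_prompt(scene: str) -> str:
    # Only the scene description varies from prompt to prompt.
    return TEMPLATE.format(style=STYLE, character=CHARACTER, scene=scene)
```

Calling `scene_prompt("student walking to university")` returns the Scene 1 prompt verbatim.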

Ensuring Color Cohesion

Color inconsistencies made the storyboard feel disjointed, as though its scenes belonged to different videos. To resolve this, we asked the language model to generate a cohesive color palette, including accent, highlight, and shadow colors. The palette was then integrated into the image generation prompt templates, ensuring a consistent, harmonious color theme throughout.
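One way to wire the palette into the templates is to ask the language model for JSON and append the same palette phrase to every scene prompt. This sketch assumes the model replies with a JSON object whose keys are `accent`, `highlight`, and `shadow`; that format and the phrase wording are our assumptions.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Palette:
    # Hypothetical structure for the palette the language model returns.
    accent: str
    highlight: str
    shadow: str

    def as_prompt(self) -> str:
        return (f"color palette: {self.accent} accents, "
                f"{self.highlight} highlights, {self.shadow} shadows")


def parse_palette(reply: str) -> Palette:
    # Assumes the model was asked to answer with a JSON object.
    data = json.loads(reply)
    return Palette(accent=data["accent"], highlight=data["highlight"], shadow=data["shadow"])


def with_palette(base_prompt: str, palette: Palette) -> str:
    # Append the same palette phrase to every scene prompt.
    return f"{base_prompt.rstrip('.')}, {palette.as_prompt()}."
```

Generating the palette once per storyboard, rather than once per scene, is what keeps the colors aligned.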

Key Takeaways

1. Standardizing Prompt Templates: Consistent prompt templates were key to ensuring visual harmony across the storyboard. These templates keep specific elements of the prompt unchanged—such as recurring characters, styles, objects, color palettes, or settings. By adopting prompt templates, we preserved uniformity across generated visuals, even as other components evolved in line with the narrative progression.

For reference on designing effective prompt templates, see the Midjourney Prompt Guide, the Stable Diffusion 3.5 Prompt Guide, and Learn Prompting: Image Prompting.

2. Advanced Prompt Techniques

2.1 Fine-tuning Prompt Weights: Highlight critical elements (e.g., main content) with high weights, assign smaller weights to less critical elements (e.g., styles), and use negative weights to exclude unwanted components. This ensures the model focuses on what’s most important.
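The article does not specify how negative weights were applied. One common option, sketched below as an assumption, is to route negatively weighted terms into a separate negative prompt (diffusers' `StableDiffusionPipeline` accepts a `negative_prompt` argument) while formatting the rest in the "(term:weight)" emphasis syntax.

```python
def split_by_weight(terms: dict[str, float]) -> tuple[str, str]:
    """Return (prompt, negative_prompt) built from term -> weight pairs."""
    positive: list[str] = []
    negative: list[str] = []
    for term, weight in terms.items():
        if weight < 0:
            # Negative weight: exclude the term via the negative prompt,
            # emphasized by the magnitude of its weight.
            negative.append(f"({term}:{abs(weight):g})")
        elif weight == 0:
            continue  # a zero weight contributes nothing
        elif weight == 1.0:
            positive.append(term)
        else:
            positive.append(f"({term}:{weight:g})")
    return ", ".join(positive), ", ".join(negative)
```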

2.2 Crafting Descriptive Prompts: The key to enhancing control over a model’s output lies in the use of explicit and detailed prompts, resulting in more thorough and accurate generated images. According to best practices in prompt engineering, such as those outlined by OpenAI and Google Vertex AI, the more specific and comprehensive the description, the better the model can produce relevant and accurate outputs. Clear and descriptive instructions ensure that the AI consistently generates visuals that align with the intended narrative and maintain coherence across the video.

We carefully incorporated the elements that required consistency into our template prompts, focusing on detailed descriptions of recurring characters, objects, settings, and so on. At the same time, we varied the scene-specific descriptions so that each image depicted a different moment in the storyline while maintaining overall visual harmony and consistency.

Through careful prompt engineering, including standardizing templates, fine-tuning prompt weights, and crafting detailed descriptions, we addressed inconsistencies in style, character representation, and color. This deliberate, step-by-step optimization elevated the quality and coherence of the AI-generated visuals and demonstrated the critical role of structured refinement in achieving a unified, compelling visual experience.

Conclusion

Our work on the Automated Storyboarding Tool highlighted the importance of strategic planning, meticulous prompt engineering, and collaborative refinement. These best practices not only resolved technical challenges but also ensured client satisfaction and a streamlined workflow.

As we look to the future, we aim to explore new AI models and techniques to push the boundaries of what's possible in generative AI.

Minh Phan

AI Engineer

Minh is a talented AI/ML Engineer with two years of specialized AI experience who excels in fields such as Natural Language Processing (NLP), Computer Vision (CV), and Large Language Models (LLMs). She adeptly applies her AI expertise across diverse platforms, enhancing industries with intelligent, scalable solutions.